Las Vegas, January 2009: The Audi A6 Avant cruises at 70km/h through the heavy traffic heading south on Interstate 15. The Audi engineer next to me sits behind the wheel, hands on his legs and feet off the pedals. The A6 is driving itself, automatically adjusting to the ebb and flow of the traffic, changing lanes as the engineer taps the indicator stalk.

Audi calls the system Piloted Driving and says the technology will be available on a production Audi in five years’ time. Piloted Driving, says Bjorn Giesler, one of the engineers developing the system, is designed to allow drivers to let the car do the boring stuff. “Our cars will always be for people who like to drive and like performance. But driving in a traffic jam is not fun and not interesting. So, we offer the opportunity for the driver to let the car do this for them.”

New York, March 2018: Autonomous vehicle specialist Waymo, which began life as Google’s self-driving car project in 2009, announces it is buying 20,000 Jaguar I-Pace electric vehicles as part of the company’s plan to launch a fully autonomous commercial ride-hailing service – in other words, taxis without human drivers – in 2020. Waymo and Jaguar Land Rover engineers will work in tandem to build these robo-taxis to be self-driving from the start, rather than retrofitting them after they come off the assembly line.

“We’re not a car company,” John Krafcik told me when he was CEO of Waymo. “We’re not making cars. We don’t have to worry about monthly sales figures, we don’t have to worry about the facilities required to tool new vehicle architectures. We’re focused on building the driver. That’s all we’re focused on. We want to build the world’s safest, most experienced driver.”

Autonomous vehicles – self-driving cars and trucks – have been the car industry’s Next Big Thing for almost 20 years now. Even longer for the industry’s real futurists: General Motors was experimenting with autonomous drive systems almost 70 years ago, building two concept cars – Firebird II in 1956 and Firebird III in 1958 – plus a number of Chevy test mules equipped with primitive sensors that would allow them to automatically follow wires buried under the surface of the road.

GM’s system relied on its autonomous cars passively interacting with smart infrastructure that was expensive to build and maintain, and as rigidly inflexible as a railway line. But with the explosive growth in computing power and speed since then, combined with a dramatic plunge in cost – the average smartphone has eight thousand times the memory and is a million times faster than the US$7 million Cray X-MP supercomputer that was state of the art in the 1980s – pundits have long predicted that by the early 21st century regular cars wouldn’t need clever roads. They’d be smart enough to figure out how to drive themselves anywhere.

It hasn’t happened. Yet.

Enthusiasts may recoil, but the idea of cars that drive themselves, smart cars controlled by machine logic rather than human emotion, is a seductive one. According to Nissan researchers, 93 percent of all road crashes are the result of driver error. Autonomous vehicles would in theory eliminate those. As such, their appeal to government policymakers around the world is enormous.

A significant reduction in road crashes would ease pressure on public health systems. Police and firefighters could spend more time on solving crimes and preventing fires instead of catching speeders and cleaning up the grisly mess at crash scenes. Existing highway networks could be used more efficiently, smart cars automatically running centimetres apart, nose-to-tail in fuel-efficient ‘platoons’. Traffic congestion in cities could be better managed, with smart cars not only working together to optimise use of the road space, but also easily allowing the implementation of tolls and parking fees.

Best of all, governments wouldn’t have to spend vast sums of public money to make it happen. All they would have to do is mandate smart cars. Consumers would pay the cost of all the enabling technologies when they purchased, leased, or rented them.

What’s more, selling the idea of smart cars to that overwhelming majority of car buyers who really aren’t interested in driving wouldn’t be that hard: I can eat breakfast and do my email in clean, air-conditioned comfort with my favourite tunes banging on the sound system while I’m heading to the office? I can send the car out to pick up the kids from footy practice while I make dinner? I can summon my car to the door of the restaurant and get it to whisk me home after I’ve had a few drinks? I can stretch out and sleep while my car takes me to Melbourne? Sign me up!

In 2009 those scenarios seemed just around the corner. More than a decade later, they’re still around the corner and a long way up the road. But the problem with autonomous vehicles isn’t really one of technology. It’s one of ethics.


You’re cruising down the middle lane of the freeway in heavy traffic, following a truck. On your right is a shiny new Volvo with a Baby on Board sticker in the window. On your left, a motorcyclist. Suddenly, a large heavy object falls off the back of the truck, right in your path. There’s no chance of stopping. What do you do? Stay in your lane and brace for the head-on hit? Swerve left and take out the motorcyclist? Or dive to the right and ram the Volvo?

You’ll simply react, stomping the brake pedal and swinging the wheel one way or the other. You’ll only think of the consequences – the dead motorcyclist or the badly injured baby – when the shock wears off and your hands stop shaking and you’re lying there in the dark wondering if you’ll ever sleep again.

But what if you did have the ability to analyse the situation in real time as it unfolded in front of you, and logically determine a course of action? What would you do? Prioritise your own safety by aiming for the motorcyclist? Minimise the danger to others at the cost of your own life by not swerving? Or take the middle ground and centrepunch the Volvo, hoping its high crash safety rating gives everyone a chance of survival?

In the crash scenario outlined above, any consequent death would be regarded as the result of an instinctual panicked move on the part of the driver, with no forethought or malice. But what if that fatality was the result of behaviours programmed into an autonomous vehicle?

“That looks more like premeditated homicide,” says Patrick Lin, director of the Ethics and Emerging Sciences Group at California Polytechnic State University, San Luis Obispo. Why? Because optimising an autonomous vehicle to ensure it minimises harm to its occupants in such a situation – something we’d all want the one we’re riding in to do – involves targeting what it should hit.

A crash is a catastrophic event, but at its core is a simple calculation: force equals mass times acceleration. Designing a vehicle that helps its occupants survive a crash is therefore fundamentally an exercise in slowing its rate of deceleration (the numerically negative form of acceleration) during the crash event, usually by engineering crumple zones around a strong central passenger cell.
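To put rough numbers on that calculation, the sketch below combines F = ma with the constant-deceleration relation v² = 2ad. The 80kg occupant, the 50km/h impact speed and the two crush distances are illustrative assumptions, not figures from any carmaker, and real crash pulses are far from constant.

```python
G = 9.81  # standard gravity, m/s^2


def average_deceleration(speed_kmh: float, stopping_distance_m: float) -> float:
    """Average deceleration in m/s^2 to stop from speed_kmh within stopping_distance_m.

    Assumes constant deceleration, so v^2 = 2 * a * d.
    """
    speed_ms = speed_kmh / 3.6  # convert km/h to m/s
    return speed_ms ** 2 / (2 * stopping_distance_m)


def average_force(mass_kg: float, speed_kmh: float, stopping_distance_m: float) -> float:
    """Average force in newtons acting on a body of mass_kg: F = m * a."""
    return mass_kg * average_deceleration(speed_kmh, stopping_distance_m)


if __name__ == "__main__":
    # Hypothetical inputs: 80 kg occupant, 50 km/h impact, two crush distances.
    for crush_m in (0.3, 0.6):
        a = average_deceleration(50, crush_m)
        f = average_force(80, 50, crush_m)
        print(f"{crush_m} m of crush: {a / G:.1f} g, {f / 1000:.1f} kN")
```

Whatever the inputs, doubling the distance over which the occupant is brought to rest halves both the average deceleration and the average force in this simple model, which is the job a crumple zone is there to do.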

Autonomous technology adds an active element to that calculus: when a collision is unavoidable, it has the potential to be able to direct the vehicle to hit the smallest and lightest of objects – the motorcycle rather than the Volvo, for example – to enhance the probability its occupants will survive. That outcome is the direct result of an algorithm, not instinct. So, who bears responsibility for the death of the motorcyclist? The programmer who wrote the algorithm? The carmaker that determined such an algorithm should be part of its autonomous vehicle’s functionality? Or the person whose journey put the motorcyclist at risk?

The roads are the easy bit. Figuring out how to navigate that sort of moral maze is why the deployment of autonomous vehicles on our roads has failed to match the rosy predictions made little more than 10 years ago.

And it’s why the development of autonomous vehicles has become a race between those – mainly existing carmakers – who see autonomous driving as the ultimate function of ever more sophisticated iterations of the advanced driver-assist systems (ADAS) they’ve already created to help humans behind the wheel, and those – mainly tech startups such as Waymo, Cruise, Zoox and Aurora – who are convinced it can only be done by creating highly skilled and omniscient digital drivers.

Back to basics: What do we mean by ‘autonomous vehicle’? The Society of Automotive Engineers (SAE) standard J3016 defines it by way of categorising the levels of driving automation, Level 0 being no automation whatsoever and Level 5 being full vehicle autonomy (see table below).

Level 2 autonomy – steering and braking and acceleration support for the driver, plus lane keeping and adaptive cruise control – is relatively common ADAS technology, even on mainstream brands. Audi’s 2017 A8 was the first production car with Level 3 capability – meaning the vehicle could completely drive itself for unlimited periods within a strict set of operating parameters – but the system, called Traffic Jam Pilot, was never activated as it was not legally able to be used on the road.

Mercedes-Benz’s timing was better. With Level 3 autonomous operation made legal in Germany from January 2021, both the new S-Class sedan and EQS electric car can operate as Level 3 autonomous vehicles using the company’s Drive Pilot system, but are only permitted to do so on motorways at speeds up to 60km/h. Honda’s Japanese-market Legend Hybrid EX fitted with the company’s Sensing Elite safety system is also Level 3 capable. It has been approved by authorities for use in Japan, but, again, only on freeways and at speeds up to 60km/h.

Other manufacturers have ADAS systems that are close to Level 3 capable. Ford’s BlueCruise can operate hands-free in designated ‘Blue Zones’, but Ford insists the system does not replace “the driver’s attention, judgement and need to control the vehicle”. GM has announced an enhanced version of its SuperCruise system that will include lane change on demand and the ability to tow a boat or a caravan hands-free.

“Level 3 is where it gets interesting,” said David Cooke, senior associate director of the Ohio State University Center for Automotive Research, in a recent interview with American trade publication Automotive News. “In Level 2 the human is always in charge. [In Level 3] the vehicle is in charge, until it’s not.”

And that’s a big problem. Tests have shown that, relieved of the task of mentally and physically controlling their vehicles, drivers can take up to 15 seconds to reacquaint themselves with what’s happening around them on the road. Meanwhile, Level 3 systems can switch full control back to the driver in as little as one second. That’s a recipe for disaster. And Tesla’s much-hyped Full Self-Driving (FSD) system proves the point.
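A back-of-envelope sketch shows the size of that gap. It assumes a constant 60km/h, the speed cap applied to the Level 3 systems mentioned above, and takes the one-second and 15-second figures at face value; the function name is purely illustrative.

```python
def distance_travelled_m(speed_kmh: float, seconds: float) -> float:
    """Distance in metres covered at a constant speed_kmh over the given time."""
    return speed_kmh / 3.6 * seconds  # km/h to m/s, then multiply by time


if __name__ == "__main__":
    speed = 60  # km/h, assumed constant during the handover
    handover_s = 1    # fastest handover time cited for Level 3 systems
    reorient_s = 15   # upper end of driver re-engagement time cited in tests

    print(f"Car hands back control after {distance_travelled_m(speed, handover_s):.0f} m")
    print(f"Driver is fully re-engaged after {distance_travelled_m(speed, reorient_s):.0f} m")
```

On those assumptions the car covers roughly 17 metres before it hands back control, while the driver may need about 250 metres to catch up with what is happening.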

FSD is a $10,000 upgrade to Tesla’s Autopilot system that the company hints will ultimately deliver Level 3 or even Level 4 capability. Officially, FSD is a Level 2 system, as Tesla acknowledges in the fine print on its website: “The currently enabled features require active driver supervision and do not make the vehicle autonomous.” But Tesla has configured FSD to allow it to operate much like a Level 3 system with the legislative safety guardrails removed and is allowing customers to test it in real time on real roads.

In April 2021, America’s National Highway Traffic Safety Administration (NHTSA) began examining 39 incidents involving advanced driver-assist systems since 2016 that resulted in 12 fatalities. It says 33 of those incidents involved Tesla vehicles.

NHTSA is also investigating 12 incidents in which Tesla vehicles with Autopilot activated hit stationary police and fire vehicles. Automotive News says in the aftermath of these incidents, one of which resulted in a fatality, Tesla pushed an over-the-air update for its Autopilot software, but NHTSA wants to know why the company went ahead with the update without issuing a recall.

The FSD development process reveals how Tesla is behaving like a Silicon Valley tech company rather than a traditional carmaker. It’s building the automotive equivalent of ‘minimum viable products’, using a feedback loop from consumers to continuously improve performance and functionality. The problem is, unlike updating a smartphone or an app or a game, real-world testing of autonomous driving software can – literally – be a matter of life and death.

Though much less cavalier in their approach than Tesla, traditional carmakers also believe the evolution of ADAS technologies will eventually endow their mainstream vehicles with Level 4 autonomous capability and give them the ability to build their own Level 5 autonomous vehicles if they wish, as Volkswagen hinted at with its Sedric concept. John Krafcik, now the former CEO of Waymo, disagrees: “There is really no path from Level 2 to Level 4,” he says. “There’s a huge chasm. It’s a completely different mindset.”

Indeed, Waymo’s fifth-generation digital driver relies on 29 cameras, four perimeter lidars and one 360-degree lidar (lidar is like radar but uses light pulses rather than radio waves) and an imaging radar system. Teslas have eight cameras, and most other ADAS-equipped cars have fewer than that. Virtually none have lidar, although Volvo has announced its next-gen XC90, due for launch within the next 12 months, will be lidar equipped.

Waymo boasts of having completed more than 30 million kilometres of self-driving testing on real roads, and more than 16 billion kilometres of testing in simulation. It’s slow and expensive work – despite being bankrolled by Google parent company Alphabet for more than a decade, Waymo has been forced to raise US$3.7 billion in additional funding in the past two years. Others working on Level 4 and Level 5 autonomous vehicles, such as ride-sharing services Uber and Lyft, have simply given up.

Despite the careful hardware engineering, despite the sophistication of their software, even the ground-up autonomous-vehicle developers – backed by the deep pockets of Alphabet, Apple, Amazon and Microsoft – are still grappling with the same fundamental problem as the lower-cost ADAS developers: What level of risk related to the use of autonomous vehicles is socially acceptable, and where does the responsibility for that risk reside?

Amnon Shashua is the chief executive of Mobileye, a company whose camera-and-software-based technology features in 70 percent of carmakers’ existing ADAS systems and which is now working on a full self-driving technology stack. He suggests that even if a Level 4 system can get to the point of crashing only once every 1.6 million kilometres – which means it’s twice as good as the average human driver – people would still be wary of using it.

“If I drive at 16km/h that means I crash once every 100,000 hours of driving,” Shashua says. “So, if I deploy 100,000 cars, I’ll have a crash every hour. From a business perspective that is very, very challenging.”

Yep. And it’s not just the potential reluctance on the part of autonomous vehicle users that’s the problem: Politicians are highly unlikely to want to be seen to be backing a new technology that is perceived to be less safe than travel by rail or commercial aircraft.
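Shashua’s arithmetic is easy to check. The sketch below assumes, as his example implicitly does, that every car in the fleet is on the road at the same time and crashes at the same average rate; the function names are illustrative.

```python
def hours_between_crashes_per_car(km_between_crashes: float, avg_speed_kmh: float) -> float:
    """Average driving hours between crashes for a single car."""
    return km_between_crashes / avg_speed_kmh


def fleet_hours_between_crashes(
    km_between_crashes: float, avg_speed_kmh: float, fleet_size: int
) -> float:
    """Average hours between crashes somewhere in the fleet.

    Assumes every car drives continuously and at the same crash rate.
    """
    per_car = hours_between_crashes_per_car(km_between_crashes, avg_speed_kmh)
    return per_car / fleet_size


if __name__ == "__main__":
    # Figures from the quote: one crash per 1.6 million km, 16 km/h, 100,000 cars.
    print(hours_between_crashes_per_car(1_600_000, 16))
    print(fleet_hours_between_crashes(1_600_000, 16, 100_000))
```

The per-car figure comes out at 100,000 hours and the fleet figure at one hour, matching the quote. A real fleet that is not driving around the clock would crash less often in calendar time, but the scaling problem is the same.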

Against that background, most pundits now believe that the mass adoption of purpose-built Level 4 and Level 5 autonomous vehicles is 10 to 20 years away. In the interim, they’ll be rolled out in carefully geo-fenced locations as robo-taxis, mainly in urban areas where conventional traffic is limited or even banned.

And that gives those closely following the ADAS evolution strategy a real opportunity.

We’ve grown up with cars with steering wheels and pedals. We’re familiar with both the risks and the rewards of travelling in them. They have safety features we know and understand. Even if they can drive themselves, the format is a comfortable one, for unlike in a Level 5 autonomous vehicle, which may have nothing inside but seats and screens, we feel we can always take back control.

Indeed, Georges Massing, senior manager digital vehicle and mobility at Mercedes-Benz, says moving ahead with the development of Level 4 and Level 5 self-driving technologies for series production, building on the experience already learned with Level 2 and now Level 3 ADAS, is the next logical step for the company.

Autonomous vehicles aren’t coming. They’re here. We’re just figuring out how to use them.

How the car will change the world (again)

Autonomous vehicles have the potential to reshape our cities – even society itself

The car is the machine that changed the world. From superhighways to supermarkets, it’s transformed our cities and our societies. Autonomous vehicles will change it again. There’s very real potential for our cities to become less congested, more liveable.

Because the vehicles we own and drive sit parked 95 percent of the time, we’ve had to make room for them, says John Krafcik, who has headed both Hyundai’s US operation and, more recently, autonomous vehicle technology specialist Waymo.

Krafcik says the US has four parking spaces for every car on its roads. “That’s about a billion parking spaces,” he says. “The amount of land in our cities dedicated to the car is significant. About 30 percent of traffic in cities like New York are cars looking for parking spaces, so if we can figure out a way of getting rid of parking spaces and excess traffic looking for parking spaces, cities can start looking very different.”

“Siri, make me a hero”

Why build a driver’s car when the car can do all the driving?

When Mercedes-Benz builds a fully self-driving vehicle, it will be the Mercedes-Benz of autonomous cars, imbued with everything the three-pointed star stands for. The idea of an autonomous Volkswagen or Toyota isn’t an intellectual stretch, either, as they are brands that have long specialised in providing automobility for the masses.

However, for carmakers like Ferrari and Porsche, Lamborghini and McLaren, autonomous cars present a fascinating existential challenge: Why build a driver’s car when the car can do all the driving?

Aston Martin boss Tobias Moers thinks autonomous driving capability can be an opportunity for a performance brand. In his previous role as head of Mercedes-AMG, Moers envisioned owners being able to switch their cars to a high-performance autonomous driving mode during track days and have a digital version of AMG brand ambassador and former F1 driver Bernd Schneider coach them in real time.

As the car drove itself around a circuit with Schneider levels of speed and precision, augmented reality technologies would show the optimal braking and acceleration points and the right lines through corners. The on-board software and drive-control systems could then be set to progressively reduce the assistance provided by the car and allow the driver to take more control as their skill and confidence improved.

“Autonomous cars will democratise motorsport,” Moers said.

If he’s right, maybe we enthusiasts can relax a little. Maybe smart cars could also teach us to be smarter, faster drivers.

In for the long haul

Will the trucking industry be the first to go driverless?

Work on fully autonomous trucks is accelerating. In May 2021, an autonomous truck developed by California-based startup TuSimple travelled from Nogales in Arizona to Oklahoma City, a distance of about 1450km, in just 14 hours. With a human driver and legally mandated rest stops, it would normally have taken 24 hours.

While getting a Level 5 autonomous vehicle to work in all environments is tricky, autonomous trucks are seen as being initially more viable, particularly in the US as they would travel on freeways with clearly marked lanes and relatively gentle curves and gradients.

And the business case behind getting the technology to work is compelling: apart from solving the chronic driver shortage that’s now affecting most developed countries, autonomous long-haul trucks that are significantly less likely to crash and don’t need rest stops have the potential to lower per-kilometre transport costs by as much as 30 percent, says Deloitte, a consultancy firm.

Traditional truck manufacturers have done the maths. TuSimple is already working with Volkswagen’s heavy truck group, and other autonomous vehicle technology specialists are following suit: Waymo has signed a deal with Daimler, and Aurora is partnering with Volvo Trucks.

A bridge too far?

The rivers of autonomy gold remain out of reach

The prospect of self-driving cars – surely the biggest automotive grift of the late 2010s – is exciting. Whether you’re personally interested in how they could help with the dull parts of your motoring life isn’t really the point, I would wager. What matters is the huge social impact they could have.

Our ageing population would be able to stay in their homes longer. Those without the physical ability to drive wouldn’t have to rely on a patchwork of public transport options that treat them as an afterthought. Being able to load yourself into a car that arrives on demand, or into your own fully autonomous car tailored to your needs, would change many lives for the better. For millions more drivers it would make the task of driving a thing of the past, leaving them free to get on with whatever they want while the car takes them where they want to go.

We are a long way from this dream becoming a reality. And the reason is simple – we are not at the point where the hardware and software can deal with the vast mess of information and events that is all part of driving a car without at least occasionally asking a human to step in.

The US-based Insurance Institute for Highway Safety (IIHS) carried out a study co-authored by the organisation’s vice-president, Jessica Cicchino. Cicchino said, “It’s likely that fully self-driving cars will eventually identify hazards better than people, but we found that this alone would not prevent the bulk of crashes.”


Safety is often touted as a key reason for pushing autonomous driving, but the IIHS – with a clear vested interest in eliminating, or at least reducing crashes – isn’t convinced. The people building entire companies around the technology aren’t exactly brimming with confidence either.

The CEO of artificial intelligence firm Argo AI, Bryan Salesky, wrote on his blog in 2018, “We’re still very much in the early days of making self-driving cars a reality. Those who think fully self-driving vehicles will be ubiquitous on city streets months from now or even in a few years are not well connected to the state of the art or committed to the safe deployment of the technology.” Salesky had been working on the technology for almost a decade.

Salesky’s declaration came not long after Ford invested $1 billion into Argo. Salesky, now 40, told The Merge podcast that Level 5 autonomy was unlikely in his lifetime. Ford’s money was supposed to get it the first self-driving Blue Oval car by 2022. That’s not happening.

Uber dropped its attempts to develop its self-driving systems in-house. Part of Uber’s business plan was to eliminate the most expensive – and pesky – part of its business model, the driver. Drivers expect payment, fair conditions and to be looked after, and Uber was very keen to not have to do any of these things. The company underestimated the difficulty of developing autonomous car technology and handed it over to the recently listed Aurora, which itself is run by ex-Waymo and ex-Google autonomous vehicle pioneer Chris Urmson.

Urmson told The Atlantic in March 2018 that making a self-driving car is easy. It’s getting it to operate safely on the road that’s the problem. “You go get a couple of graduate students together, you get a car, you download ROS, and you can probably get a self-driving car driving around a parking lot within six months. The challenge, of course, is in the details. Let’s understand how to get to something that is efficient and safe to be out on the road.”

He declared during his time at Waymo that he didn’t want his son to have to get a driver’s licence. He is now teaching his son to drive.

We recommend

-

News

NewsVolkswagen debuts autonomous ID. Buzz at IAA Munich

Autonomous version of electric van set to be given 2025 production berth

-

News

NewsRivian fully-autonomous system pricing revealed

At the same price as Tesla's Full-Self Driving, is the Driver+ system good value?

-

News

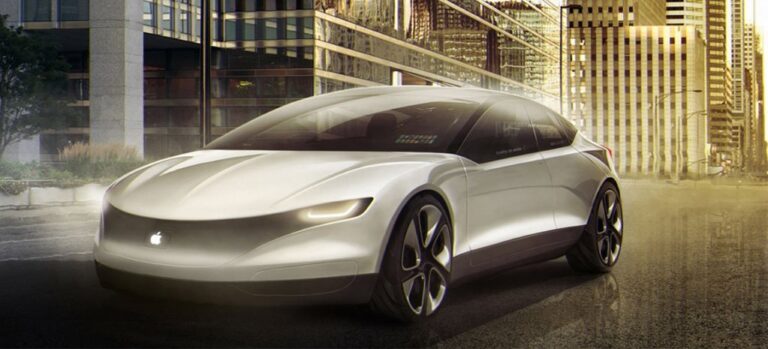

NewsApple slices autonomous program

Apple fires a third of its Project Titan autonomous team. Is this the end of the Apple Car?